Knowledge

Explore the latest insights from the world of machine-defined web data.

How Exiger Uses Webz.io’s News API to Uncover Hidden Risks in Over 1M Companies and People

Learn how Exiger uses the Webz.io News API to search 120K+ news websites for adverse news events, uncovering risks across 1.3 million companies and people.

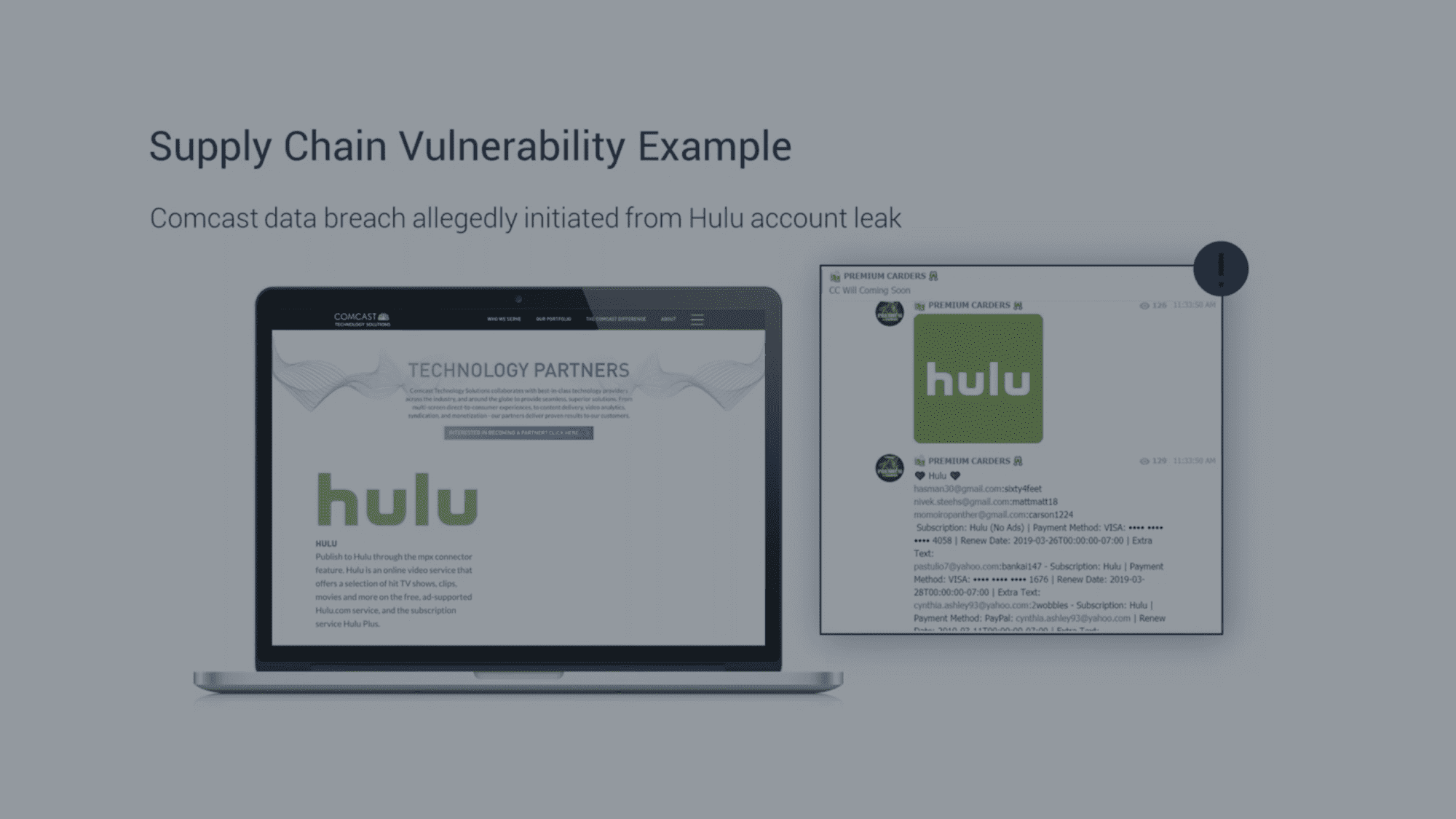

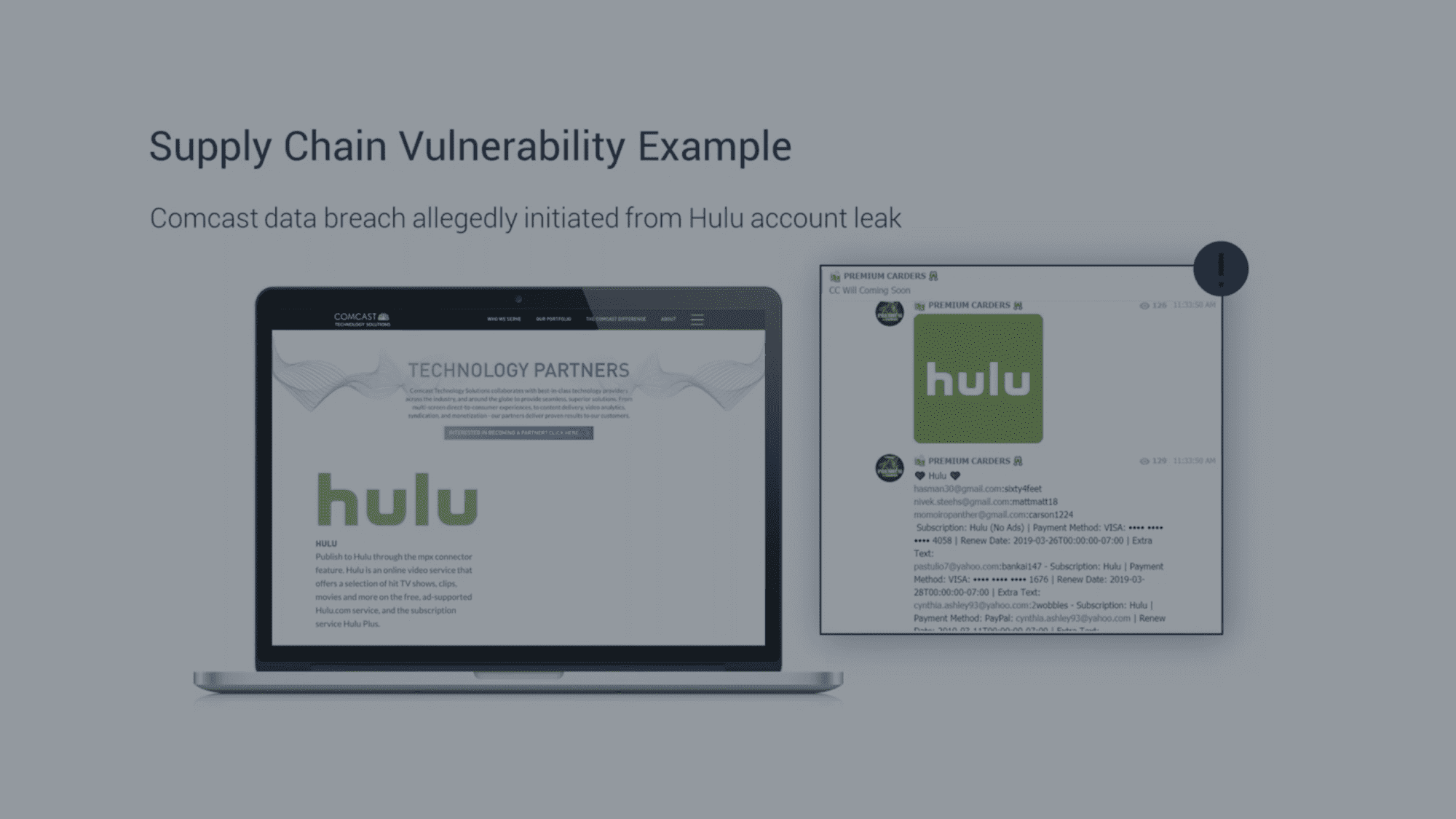

Lunar - Monitoring the Dark Web for Ransomware and Supply Chain Risks

Discover how Lunar can help you proactively monitor the dark web, identify potential threats, and take action in real time.

The Top Challenges of Monitoring Regulatory Risk in 2022

We surveyed leading U.S. risk management and RegTech companies to learn about the biggest monitoring challenges they are facing.

Monitoring Emerging Threats to Brands on Alternative Social Media

Find out how to monitor emerging threats to brands on alternative social media.

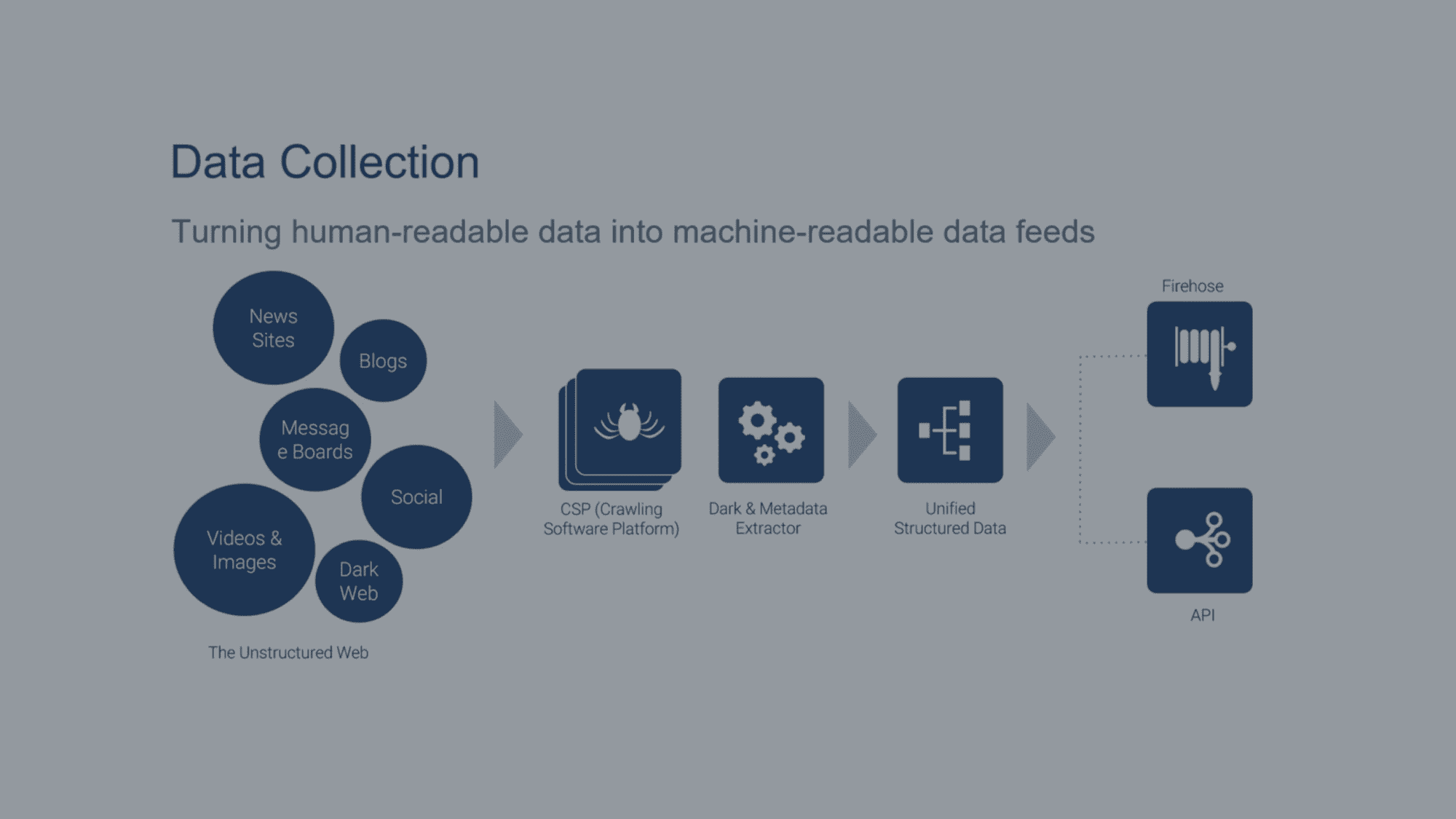

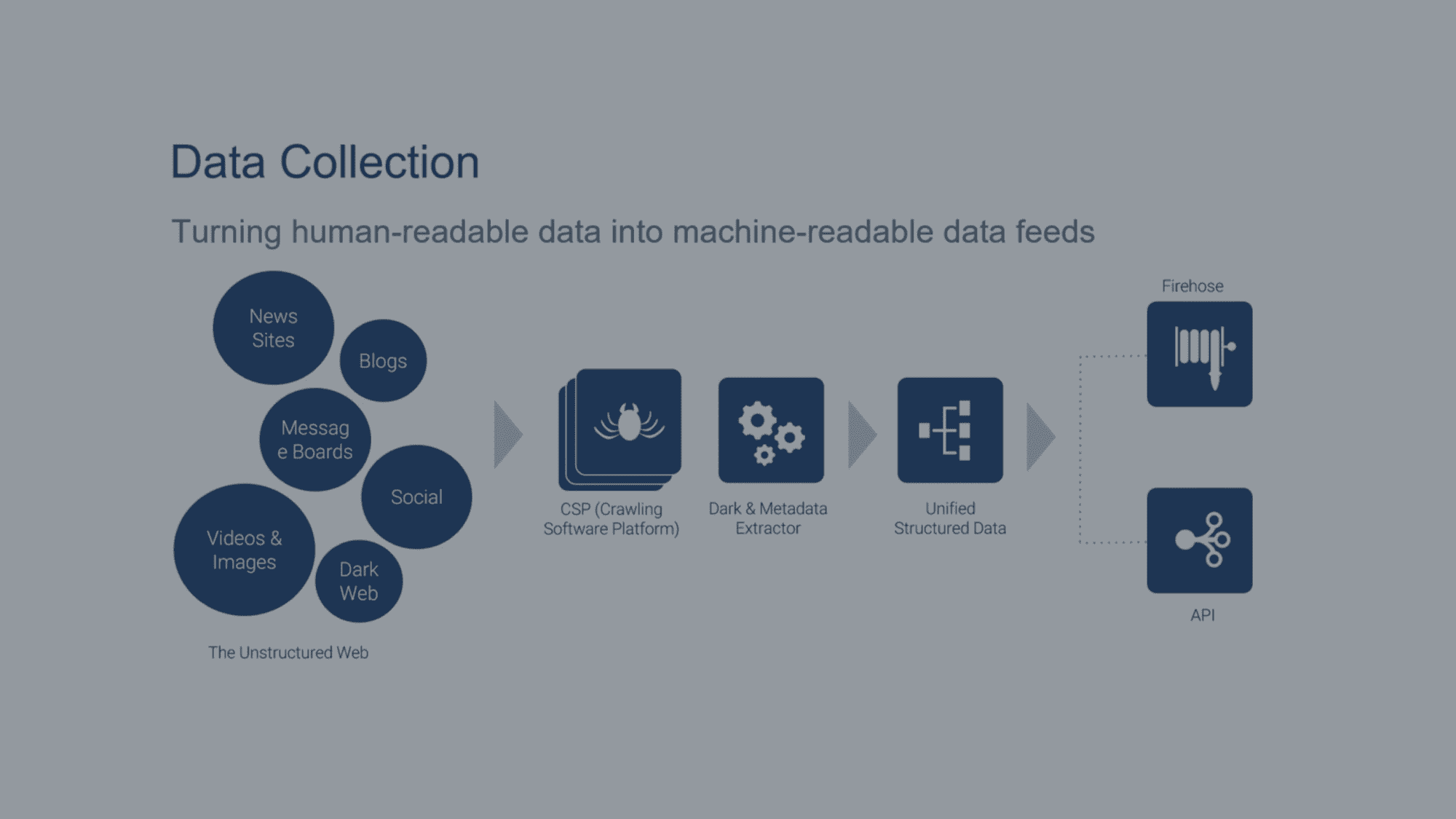

The Webz.io Guide to Structured Web Data

Discover how entrepreneurs, researchers, and Fortune 500 enterprises are using structured web data.

Webz.io & Echosec: Financial Fraud and The Hidden Internet

Tune in to learn how to monitor financial fraud by using data from the dark web.

Get Access to Comprehensive Coverage of the Web

Learn how you can maximize your coverage of the web in a way that delivers the best results.

How Webz.io Extracts Data from the Dark Web

Learn how Webz.io extracts data at scale while keeping you safe.

The Advantages of a Blog Search API

Keeping up with blogs is crucial to tracking trends, competition and customer sentiments.

The Advantages of a Forum Monitoring API

Online discussion forums are a critical source of insights about customer sentiment, trends and competition.

Building Your Own Datasets for Machine Learning or NLP Purposes

Whether you’re a researcher, a student, or an enterprise, you need a large, relevant, and accurate dataset.

The Different Services Available for Aggregating Business Reviews

In today’s digital age, gathering feedback from customers can be an overwhelming task.

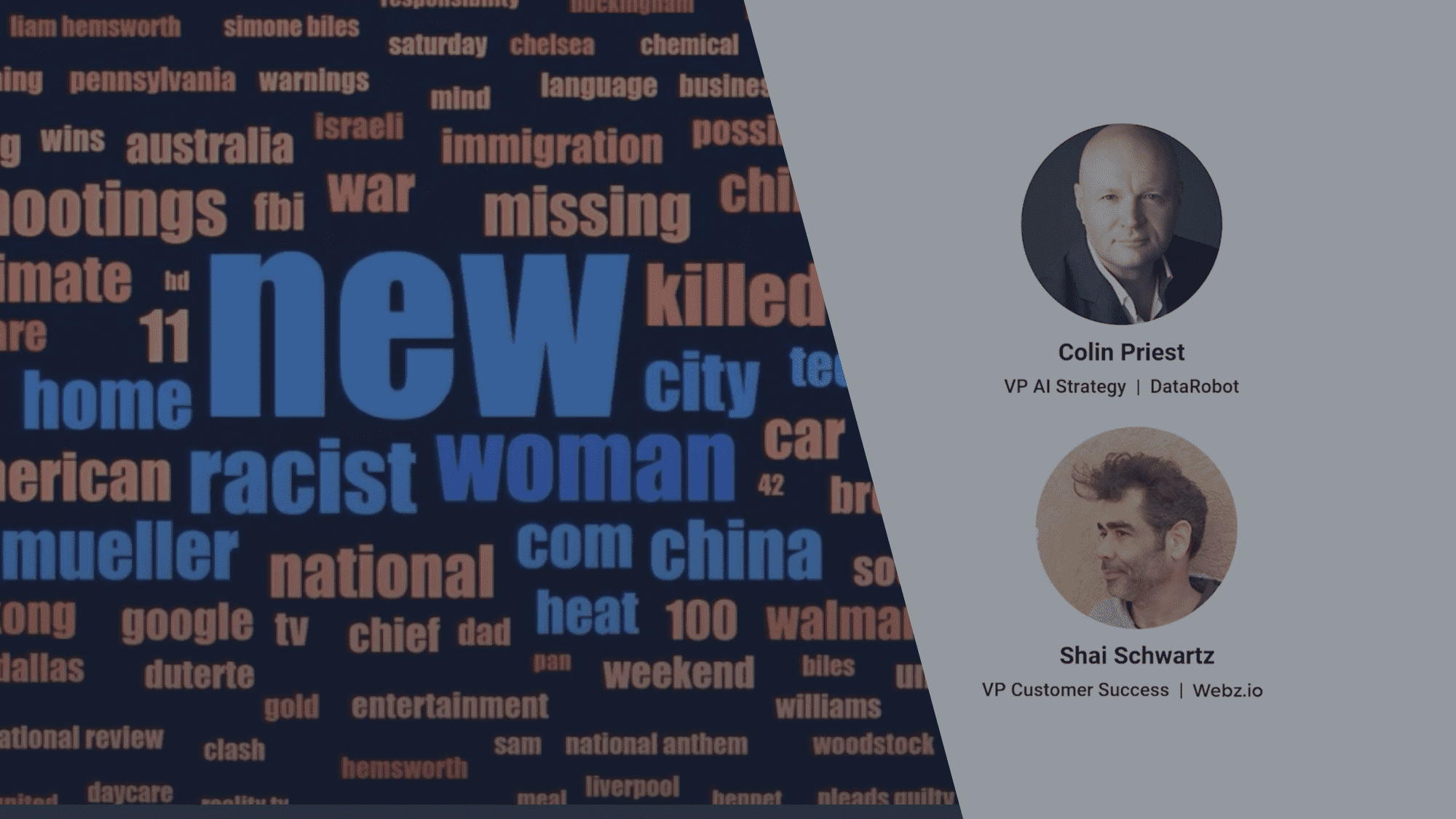

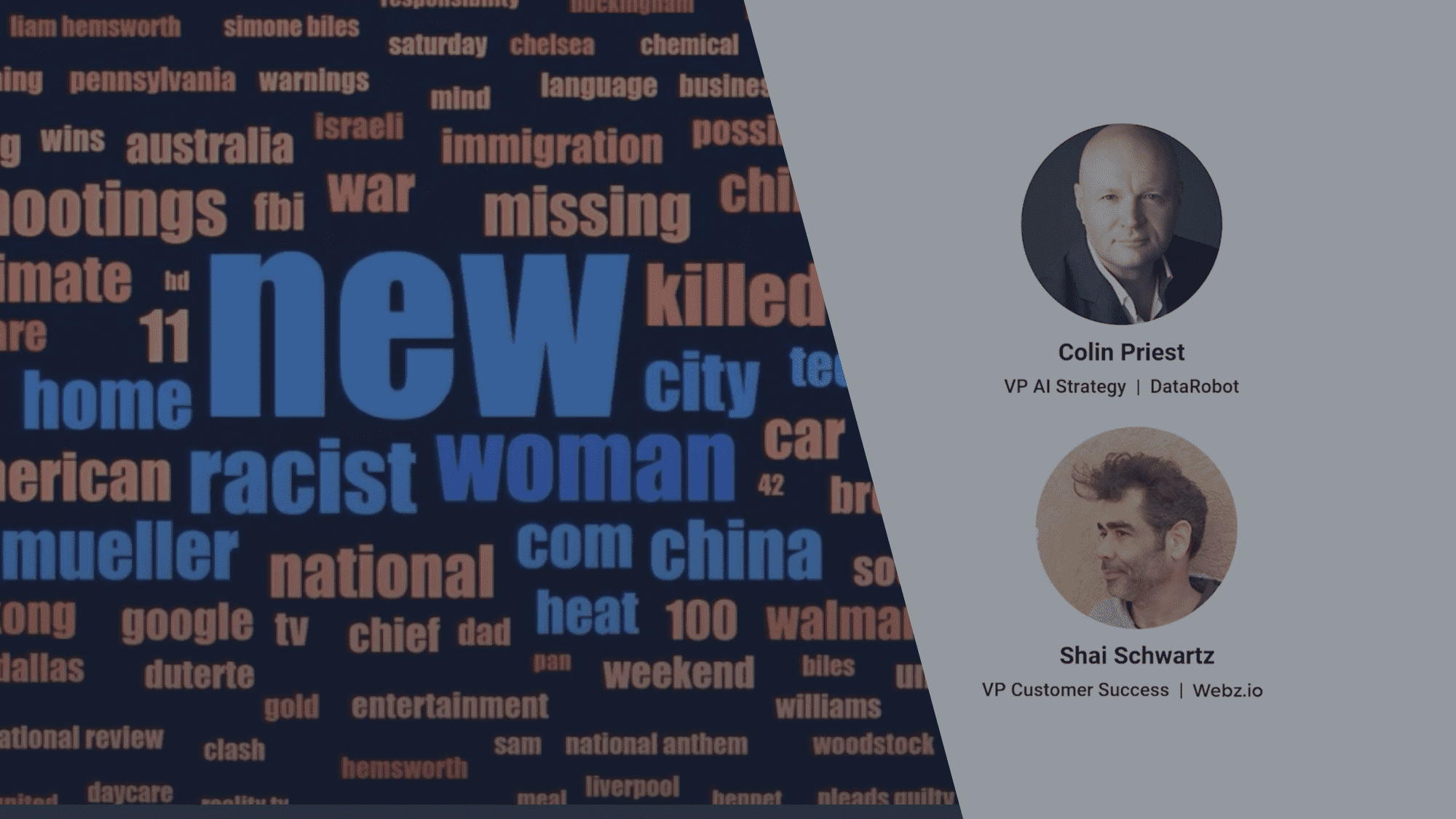

Webz.io and DataRobot: How to Predict Your Virality

Watch our special webinar with DataRobot where we discuss how to tell if a story will go viral.

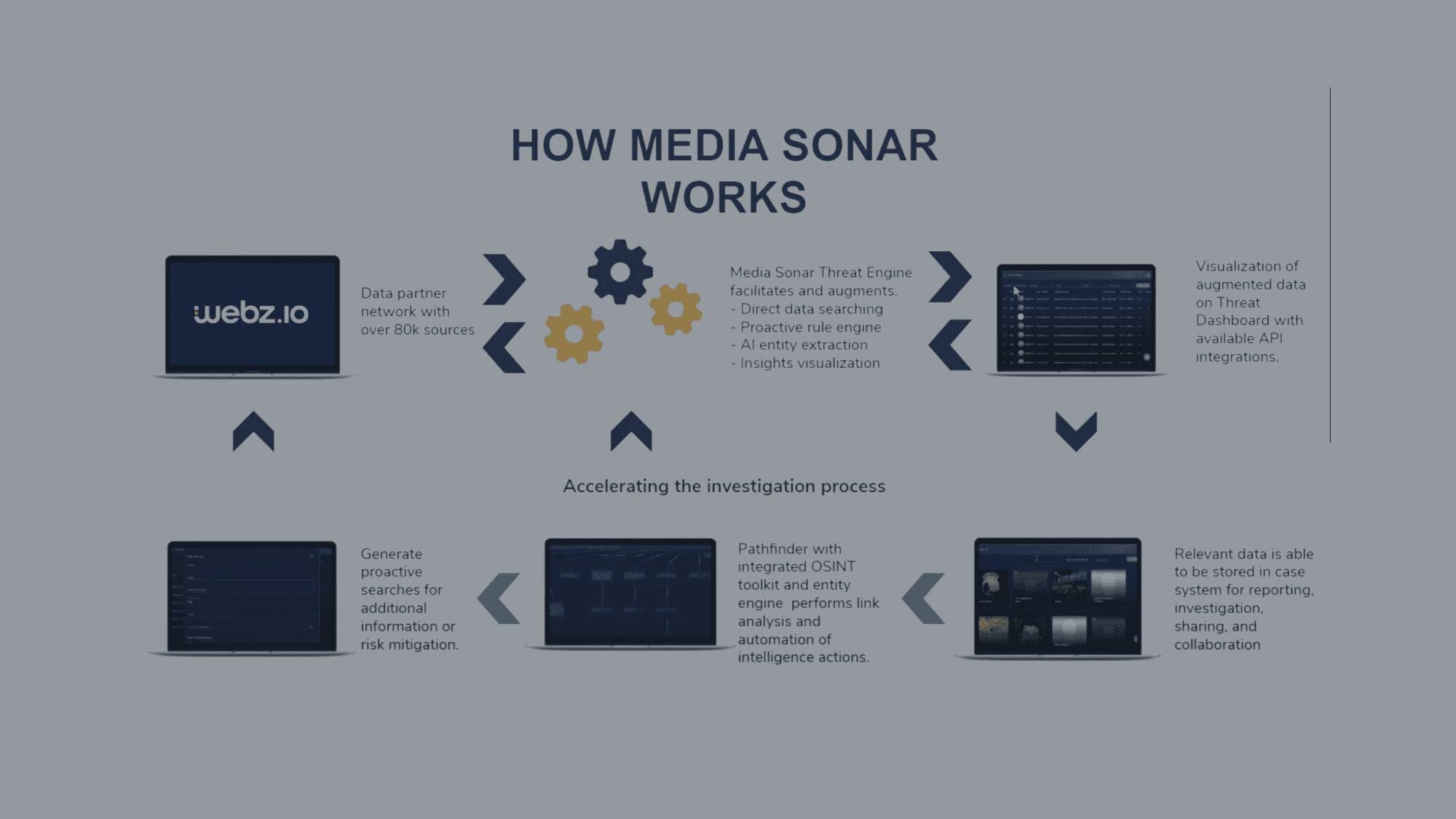

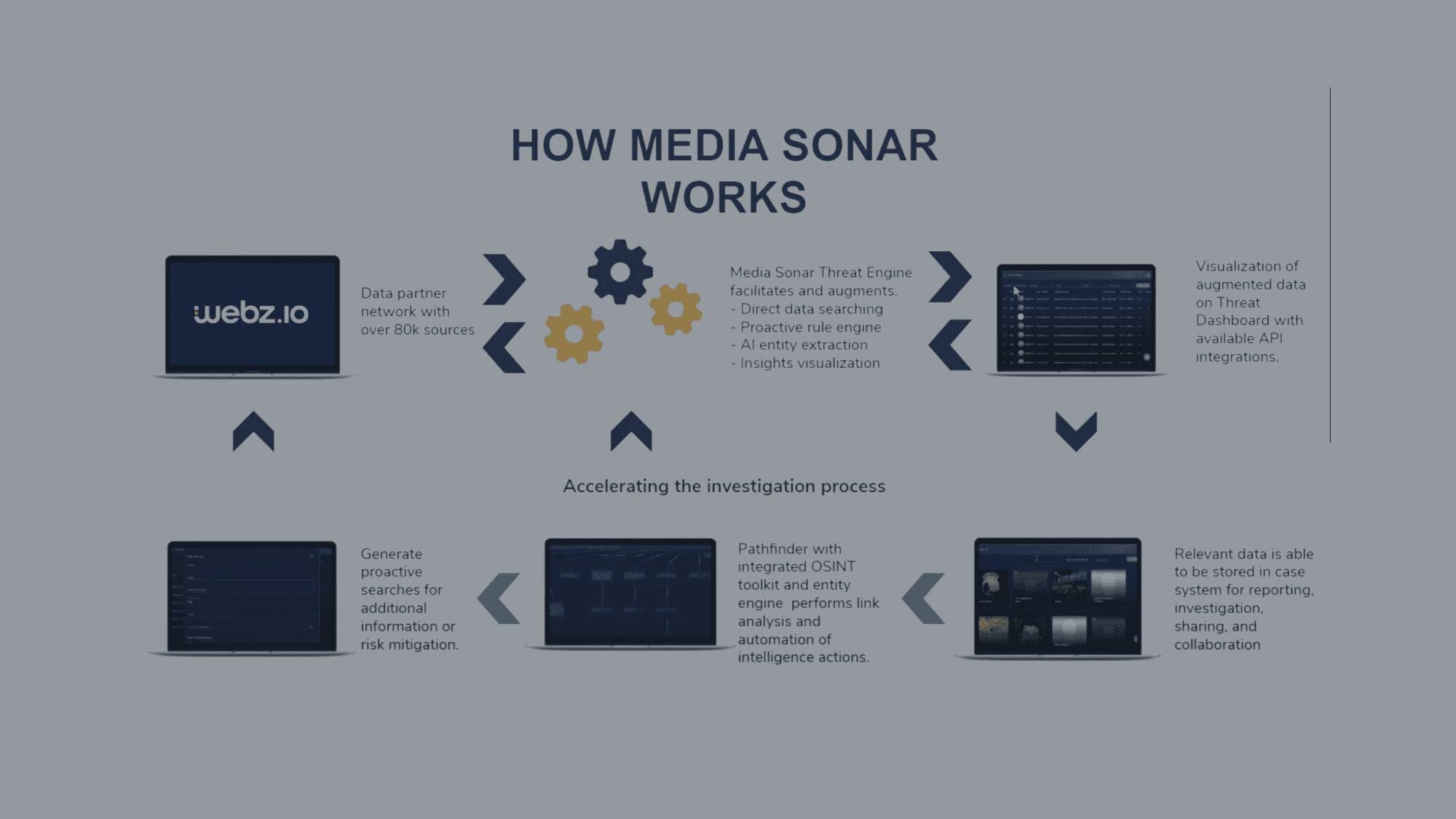

Webz.io and Media Sonar: Cyber Security Threats in Healthcare

Learn how healthcare organizations investigate cyber threats using open and dark web intelligence.

Digital Risk in The Post Pandemic Era

How health, finance, and other sectors leverage dark web data to mitigate digital risks in the post-COVID-19 era.

Detect and Respond to Cyber Crimes

Find out how to prevent financial fraud by detecting and responding to cybercrimes.

Analyze Like a Hacker

Find out how to monitor and prevent potential cyber threats.

Predict Financial Movements with Web Data

Learn how financial institutions are leveraging web data to predict financial market movements.

The Tools You Can Use to Monitor the Dark Web

Monitoring the dark web is challenging for several reasons, including its elusive and unindexed content.

The Future State of 3rd Party Risk Analysis

Learn how deep digital risk analysis automation can cut costs, reduce risks, and drive efficiency in risk management, governance, and compliance.

GetSentiment Uses Webz.io to Enhance its Sentiment Analysis

How GetSentiment used Webz.io's data to help businesses better understand their customers' experiences.

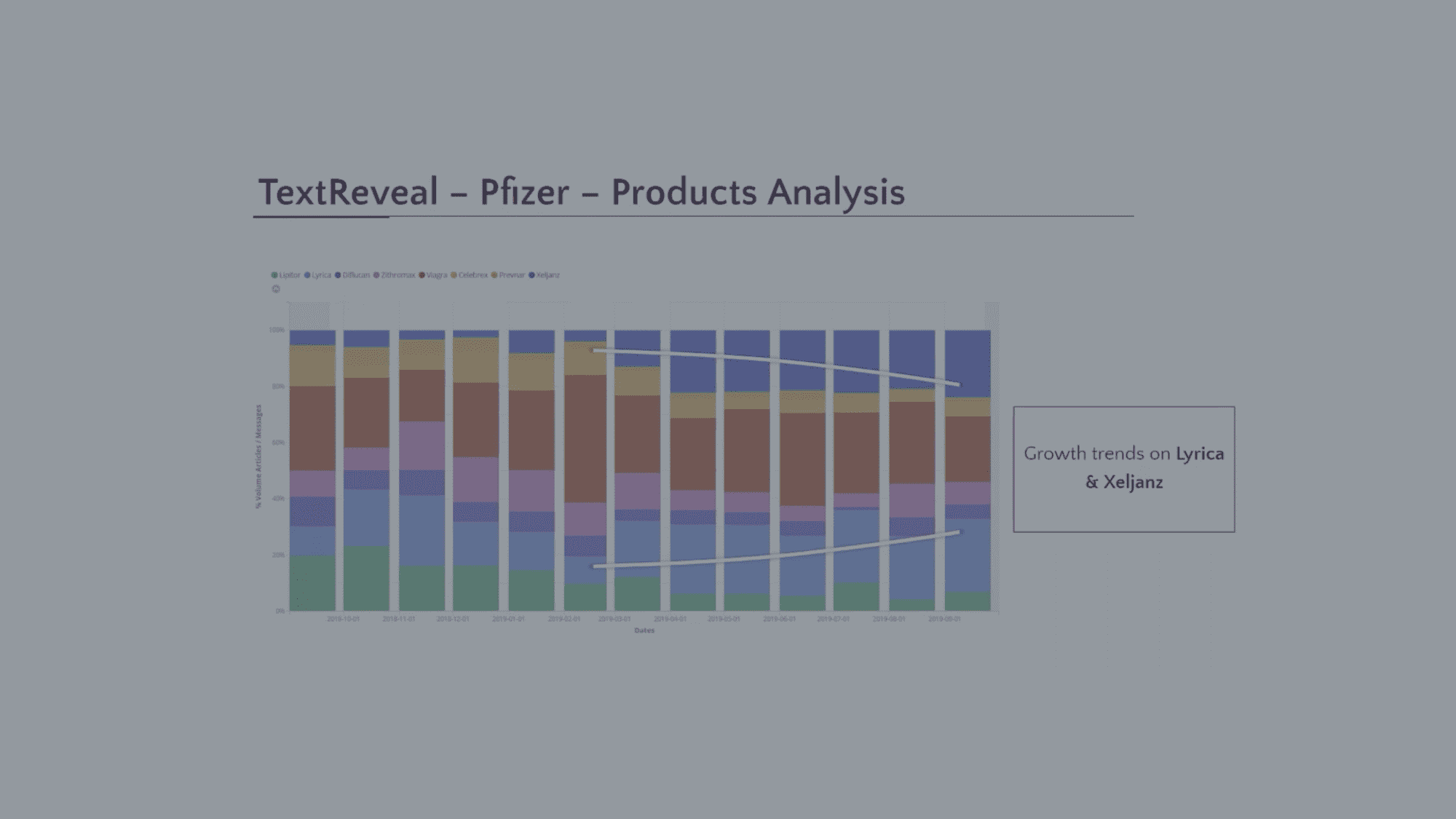

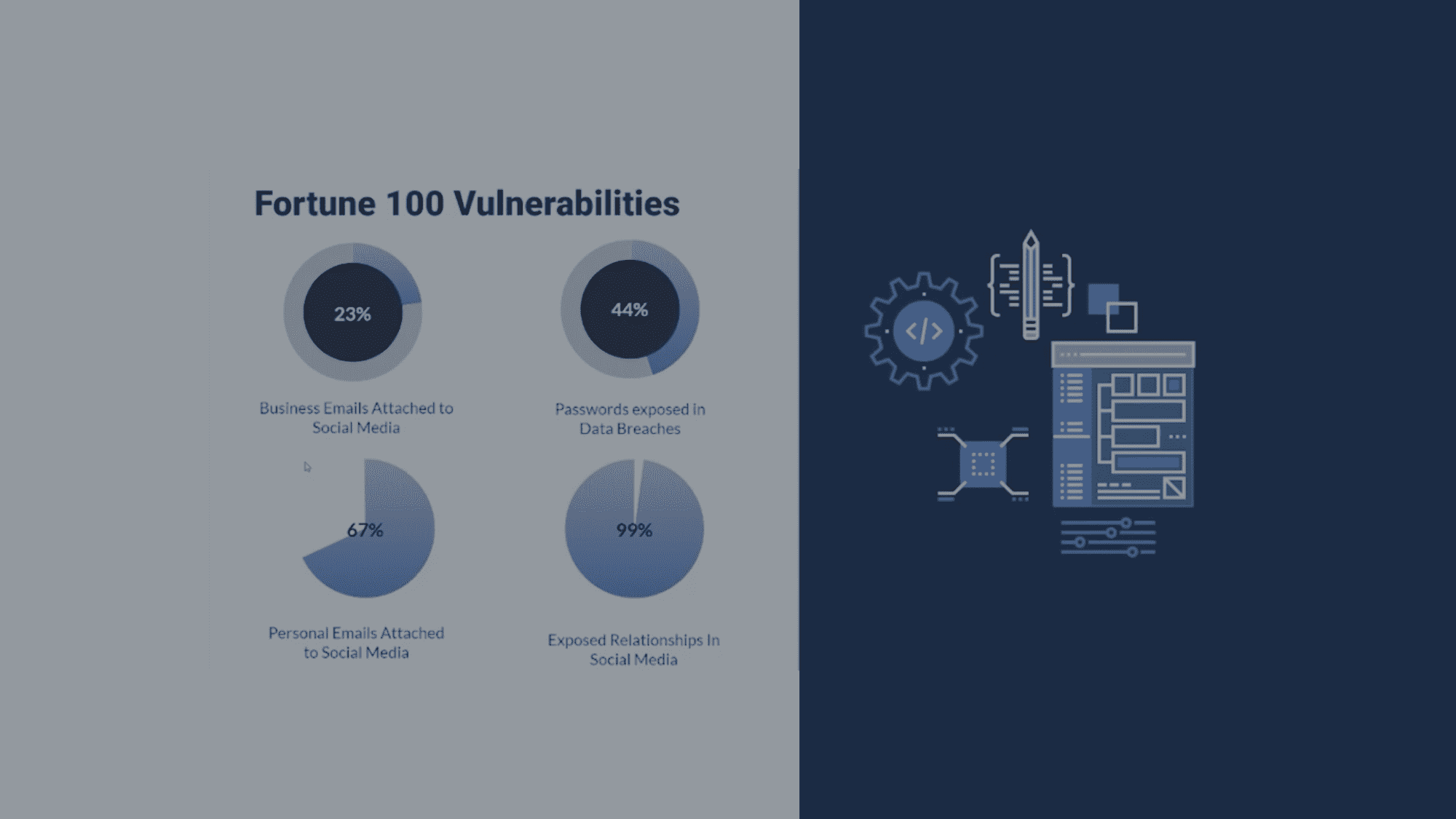

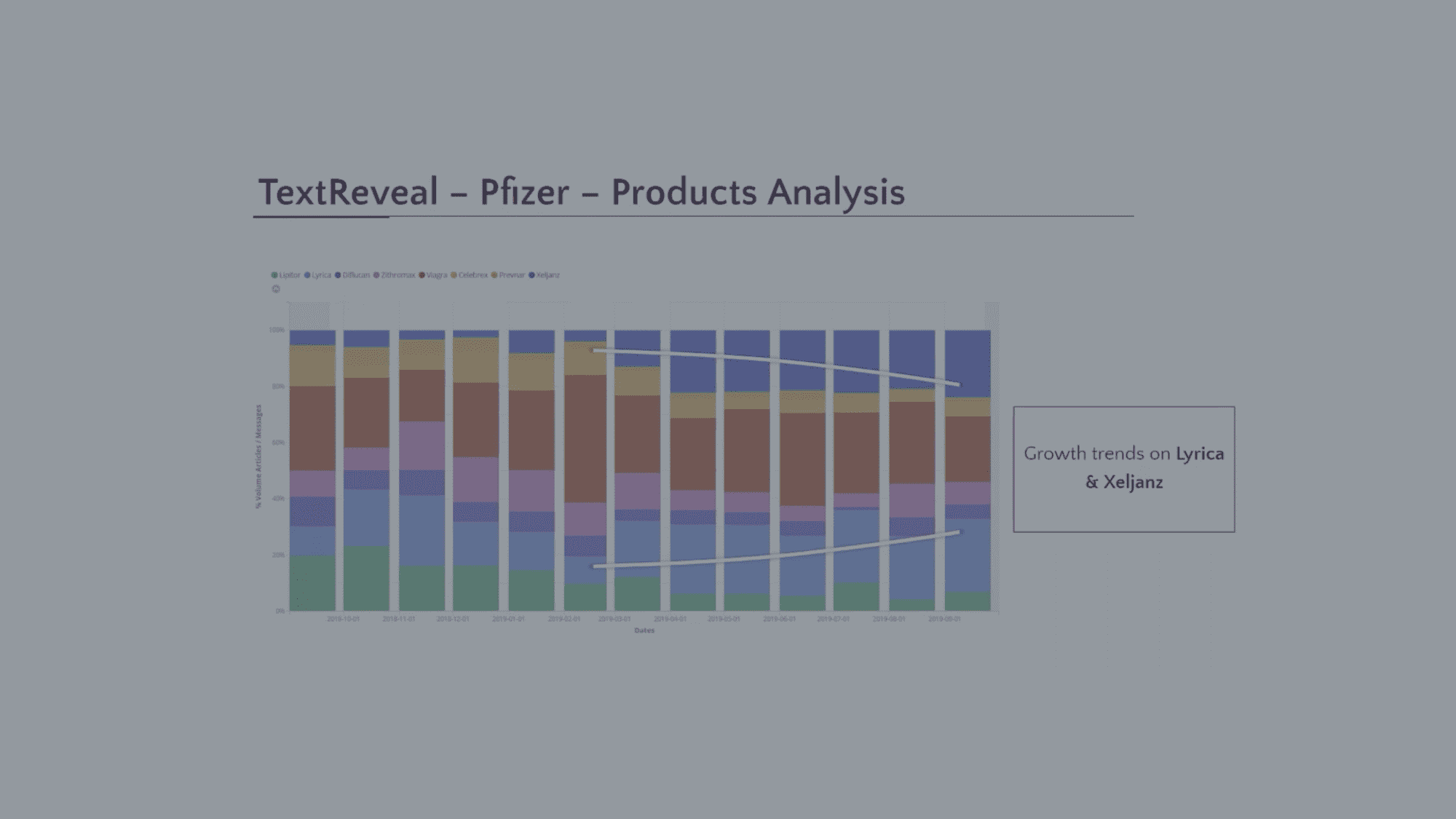

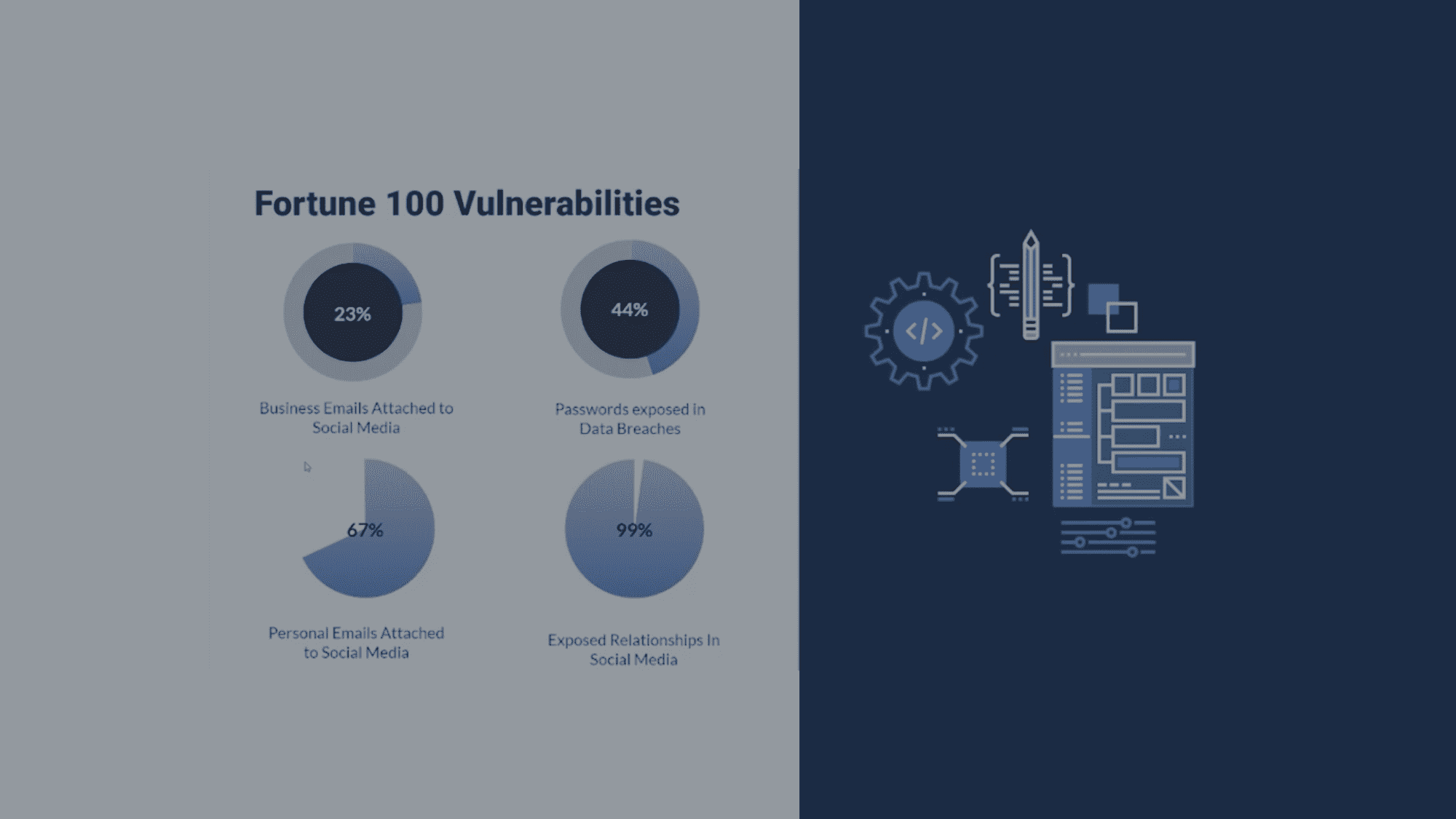

Protecting Fortune 500 Companies with Dark Web Monitoring

In a world where a ransomware attack occurs every 11 seconds, no organization is safe, including the world’s biggest companies.

How Dark Web Data is Used to Spot Malicious Activity

How cyber security organizations use Webz.io’s powerful dark web data for cyber threat awareness and intelligence.

The Crawled Web Data Dilemma: Build or Buy?

How to acquire the web data you need on a budget you can afford.

How Web Data Powers Predictive Analytics in Finance

Learn how financial institutions are leveraging web data to predict financial market movements.

The Race to Achieve 100% Coverage of Structured Web Data

Find out how to increase your effective web data coverage at any scale.

The Web Data Extraction Playbook

Are you a developer, executive, or researcher? Follow these 5 steps to leverage the open web as a data source.

How Webz.io Helps Signal Boost Its Threat and Risk Intelligence Solutions

How Signal expanded its OSINT coverage with Webz.io’s structured web data feeds covering millions of sources.

How DataRobot Used Webz.io’s Data to Identify Viral Content

Discover how DataRobot used Webz.io’s data feeds to identify and help viral content without using clickbait headlines.

How Webz.io Helped Keyhole to Monitor Brand Mentions Across the Web

How Webz.io helped Keyhole to monitor mentions of brands with web data feeds from news, blogs and forums.

CrossCheck Uses Webz.io’s Data to Flag Fake News

See how Webz.io’s news data feed powers the add-on that differentiates between fake and fact-checked news.

Mention Widens Financial Monitoring Scope with Webz.io Data Feeds

Learn how Webz.io’s data helps Mention provide customers with key financial insights in real time.

How Notified Used Webz.io’s Data to Dominate Social Monitoring in the Nordics

How Notified used Webz.io’s discussion forum data to become the leading social monitoring solution in the Nordics.

How Webz.io Boosts Kantar Media’s FishEye Social Monitoring Tool

Read how Webz.io’s quality data and coverage boosted Kantar Media’s FishEye Social Monitoring Tool.

Buzzilla Calls Election Results Using Webz.io's Data

Discover how Buzzilla, unlike leading pollsters, called the election using Webz.io's data.